EU AI Act 2025 - An Eight-Step Compliance Roadmap

Introduction

On 2 August 2025 the EU AI Act's new requirements for General-Purpose AI (GPAI) models take effect. From that day forward, developers, distributors and operators of AI whose outputs reach EU users must demonstrate transparency, documentation and security, or risk fines that reach €35 million or 7 percent of global annual turnover for the most serious infringements. This guide walks you through a fast track to compliance readiness, explains why even Swiss companies are in scope, and lists the must-ask questions when selecting an AI provider. Done right, compliance turns from a cost centre into a powerful lever for trust and revenue.

For a printable 8-step checklist and self-assessment scorecard, download our free EU AI Act guide.

Why 2 August 2025 Is a Hard Deadline

The Act entered into force on 1 August 2024, but its obligations for GPAI models and its penalty regime only start to apply on 2 August 2025. From that date, regulators can impose fines of up to €35 million or 7 % of worldwide annual turnover for the most serious infringements.
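To make the fine cap concrete, here is a minimal Python sketch of the arithmetic. It assumes the top penalty tier (€35 million or 7 % of worldwide annual turnover, the tier reserved for prohibited practices). For larger undertakings the higher of the two amounts is the ceiling, while for SMEs and start-ups the lower one applies (Art. 99(6)). The function name and parameters are illustrative, and the result is an upper bound, not a prediction of any actual fine.

```python
def max_fine_eur(annual_turnover_eur: float, is_sme: bool,
                 fixed_cap_eur: float = 35_000_000,
                 turnover_share: float = 0.07) -> float:
    """Upper bound of a fine in the top tier (defaults: EUR 35m or 7 %).

    Larger undertakings face whichever amount is higher; SMEs and
    start-ups face whichever is lower. Lower tiers can be modelled by
    passing different caps.
    """
    turnover_cap = turnover_share * annual_turnover_eur
    if is_sme:
        return min(fixed_cap_eur, turnover_cap)
    return max(fixed_cap_eur, turnover_cap)


# A group with EUR 1 bn turnover: 7 % (about EUR 70m) exceeds EUR 35m.
print(max_fine_eur(1_000_000_000, is_sme=False))
# An SME with EUR 10m turnover: 7 % (about EUR 0.7m) is below EUR 35m.
print(max_fine_eur(10_000_000, is_sme=True))
```

The SME rule matters for the audience of this guide: for a small company the turnover-based amount, not the headline €35 million, is usually the binding cap.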

Who is Affected - Even Outside the EU?

The Act applies extraterritorially: providers and deployers based outside the EU are in scope whenever the output of their AI systems is used inside the EU.

"Swiss Angle: A Swiss SME falls under the Act whenever its AI outputs have effect inside the EU, e.g. an HR bot scoring candidates for an EU job, an export machine using AI-vision inside an EU plant, or a SaaS tool serving EU customers."

Source: https://www.lexr.com/en-ch/blog/regulatory-updates-for-startups-in-2025/

The Four Risk Classes at a Glance

| Class | Typical examples | Practical takeaway |
|---|---|---|
| Unacceptable | Real-time emotion detection on employees | Totally prohibited |
| High risk | HR screening, safety-critical machine vision | CE-like conformity, strict audits |
| Limited risk | Chat & voice bots | Must disclose "AI Assistant" (Art. 50) |
| Minimal risk | Generic office automations | Voluntary code of conduct |
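As a rough illustration of how the matrix above can drive a first-pass classification, the following Python sketch maps hypothetical use-case tags to the four tiers. The tag names, the `RiskClass` enum and the rule of escalating unknown tags to high risk are assumptions for illustration only; the legal classification of a real system still needs case-by-case review.

```python
from enum import Enum


class RiskClass(Enum):
    """The four tiers of the risk matrix, each with its practical takeaway."""
    UNACCEPTABLE = "Prohibited"
    HIGH = "CE-style conformity assessment, strict audits"
    LIMITED = "Disclose AI interaction (Art. 50)"
    MINIMAL = "Voluntary code of conduct"


# Hypothetical mapping from internal use-case tags to tiers.
# These tags are illustrative, not legal categories.
USE_CASE_TIERS = {
    "workplace_emotion_recognition": RiskClass.UNACCEPTABLE,
    "hr_screening": RiskClass.HIGH,
    "safety_machine_vision": RiskClass.HIGH,
    "chatbot": RiskClass.LIMITED,
    "voice_bot": RiskClass.LIMITED,
    "office_automation": RiskClass.MINIMAL,
}


def classify(use_case: str) -> RiskClass:
    """Return the tier for a known tag. Unknown tags are escalated to HIGH
    so they get reviewed instead of silently being treated as minimal risk."""
    return USE_CASE_TIERS.get(use_case, RiskClass.HIGH)


print(classify("chatbot").value)  # Disclose AI interaction (Art. 50)
```

Defaulting unknown use cases to the strictest reviewable tier is a deliberately conservative design choice: a false alarm costs a review, a missed high-risk system costs far more.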

Need a step-by-step plan after seeing the risk tiers?

The matrix shows what the law demands; our concise EU AI Act Compliance Guide explains how and when to act:

- 8-step roadmap that maps every legal article to a concrete task

- 5-minute self-assessment scorecard

- Editable Excel template for your AI inventory and risk log

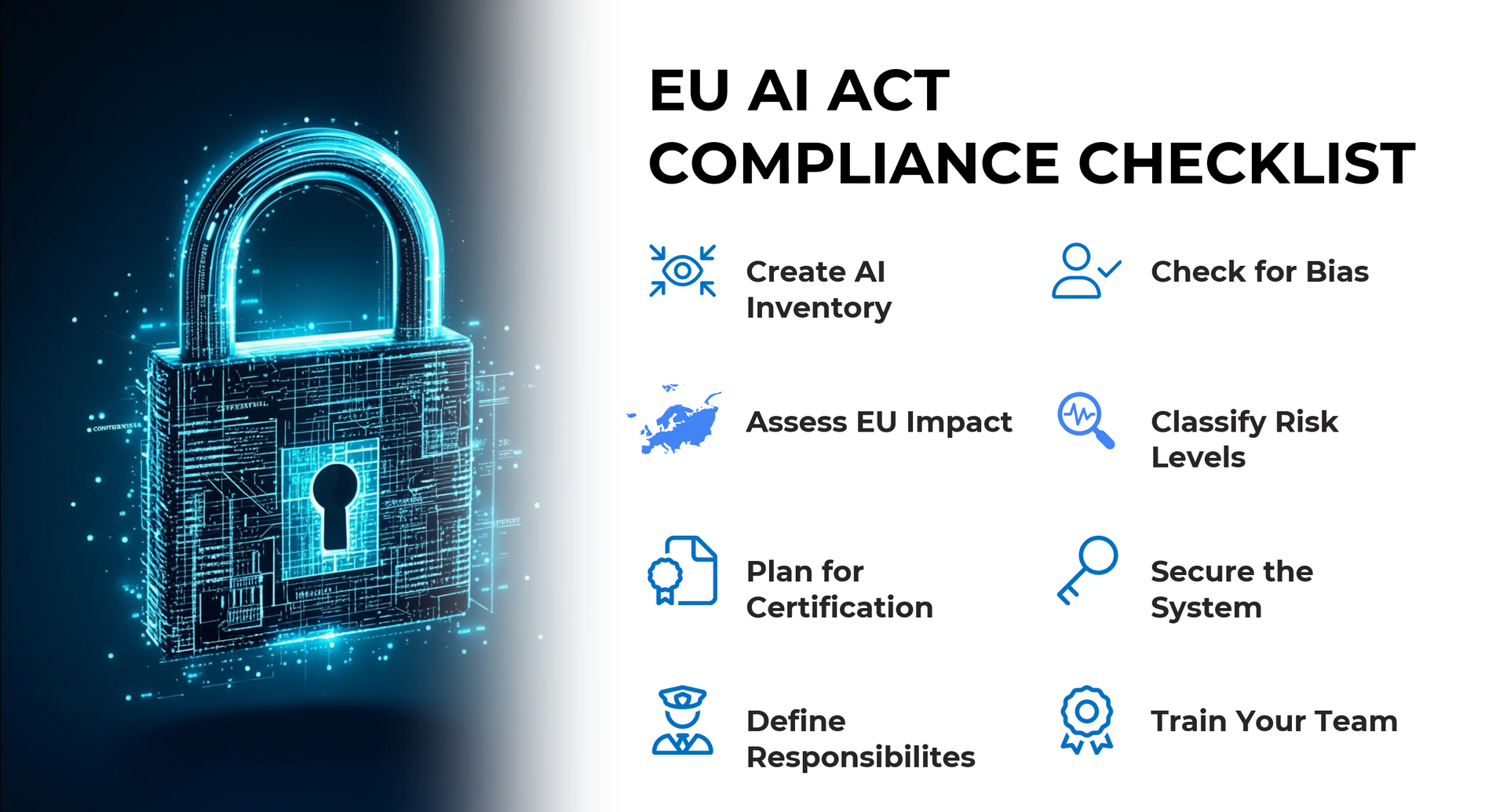

The 8-Step Fast-Track to Compliance

- Build an AI inventory – list every model and use case.

- Assess EU impact – do outputs reach EU users? Extraterritoriality matters.

- Classify the risk – apply the four-tier risk matrix above.

- Plan for certification – prepare documentation, transparency notices and (for high-risk) a conformity roadmap.

- Check for bias – run quantitative fairness tests, record results and set up continuous monitoring.

- Secure system & logs – data quality checks, cyber-hardening, tamper-proof audit logs.

- Train your team – role-based AI literacy (Art. 4), mandatory since 2 Feb 2025.

- Define responsibilities – appoint an AI officer or establish clear reporting lines.
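The bias step calls for quantitative fairness tests. A minimal Python sketch, assuming binary labels and predictions and a single protected attribute, computes per-group selection rates (for demographic parity) and true- and false-positive rates (for equalized odds), then reports the largest gap between groups. The function names and toy data are illustrative. Which gap counts as acceptable is a policy decision the sketch does not make, and maintained libraries such as Fairlearn offer tested equivalents.

```python
from collections import defaultdict


def _safe_rate(numerator: int, denominator: int) -> float:
    # Groups without positives (or negatives) get 0.0 instead of a
    # ZeroDivisionError; note this can distort gaps for tiny groups.
    return numerator / denominator if denominator else 0.0


def fairness_report(y_true, y_pred, groups):
    """Per-group selection rate, TPR and FPR, plus the max-min gap across groups.

    y_true and y_pred hold 0/1 values; groups holds one group tag per row.
    """
    counts = defaultdict(lambda: {"n": 0, "selected": 0, "pos": 0, "tp": 0, "neg": 0, "fp": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        c = counts[group]
        c["n"] += 1
        c["selected"] += pred
        if truth == 1:
            c["pos"] += 1
            c["tp"] += pred
        else:
            c["neg"] += 1
            c["fp"] += pred

    per_group = {
        group: {
            "selection_rate": _safe_rate(c["selected"], c["n"]),
            "tpr": _safe_rate(c["tp"], c["pos"]),
            "fpr": _safe_rate(c["fp"], c["neg"]),
        }
        for group, c in counts.items()
    }

    def gap(metric):
        values = [rates[metric] for rates in per_group.values()]
        return max(values) - min(values)

    return {
        "per_group": per_group,
        "demographic_parity_gap": gap("selection_rate"),  # demographic parity
        "tpr_gap": gap("tpr"),  # equalized odds, positive class
        "fpr_gap": gap("fpr"),  # equalized odds, negative class
    }


report = fairness_report(
    y_true=[1, 0, 1, 0, 1, 0, 1, 0],
    y_pred=[1, 0, 1, 1, 0, 0, 1, 0],
    groups=["A", "A", "A", "A", "B", "B", "B", "B"],
)
print(report["demographic_parity_gap"])  # 0.5
```

Storing the full report, not just the pass/fail verdict, gives you the "record results" part of the step and a baseline for continuous monitoring.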

Reality check: Can you produce, in under five minutes, the risk class, data provenance and last audit log for each model? If not, start at Step 1.
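The reality check lends itself to automation. A minimal Python sketch, assuming the AI inventory from the first step is kept as simple records, flags every model for which the EU-impact assessment, risk class, data provenance or a recent audit log is missing. The `InventoryEntry` fields and the 30-day freshness window are hypothetical choices, not requirements of the Act.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass
class InventoryEntry:
    """One row of a hypothetical AI inventory; field names are illustrative."""
    model_name: str
    use_case: str
    reaches_eu_users: Optional[bool] = None  # None = EU impact not yet assessed
    risk_class: Optional[str] = None         # e.g. "high", "limited"
    data_provenance: Optional[str] = None    # where training/grounding data comes from
    last_audit_log: Optional[datetime] = None


def reality_check(inventory, max_log_age=timedelta(days=30), now=None):
    """Return (model_name, problems) for every entry that fails the check.
    The 30-day log freshness window is an assumed internal policy."""
    now = now or datetime.now(timezone.utc)
    failures = []
    for entry in inventory:
        problems = []
        if entry.reaches_eu_users is None:
            problems.append("EU impact not assessed")
        if entry.risk_class is None:
            problems.append("missing risk class")
        if not entry.data_provenance:
            problems.append("missing data provenance")
        if entry.last_audit_log is None:
            problems.append("no audit log")
        elif now - entry.last_audit_log > max_log_age:
            problems.append("audit log older than policy window")
        if problems:
            failures.append((entry.model_name, problems))
    return failures


now = datetime(2025, 8, 2, tzinfo=timezone.utc)
inventory = [
    InventoryEntry("hr-ranker", "hr_screening", True, "high",
                   "ATS export, 2023-2024", now - timedelta(days=3)),
    InventoryEntry("faq-bot", "chatbot", risk_class="limited"),
]
for name, problems in reality_check(inventory, now=now):
    print(name, problems)
# faq-bot ['EU impact not assessed', 'missing data provenance', 'no audit log']
```

An empty result means every model passes; anything else is your to-do list for Step 1.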

Key Takeaways from Our Webinars on the EU AI Act

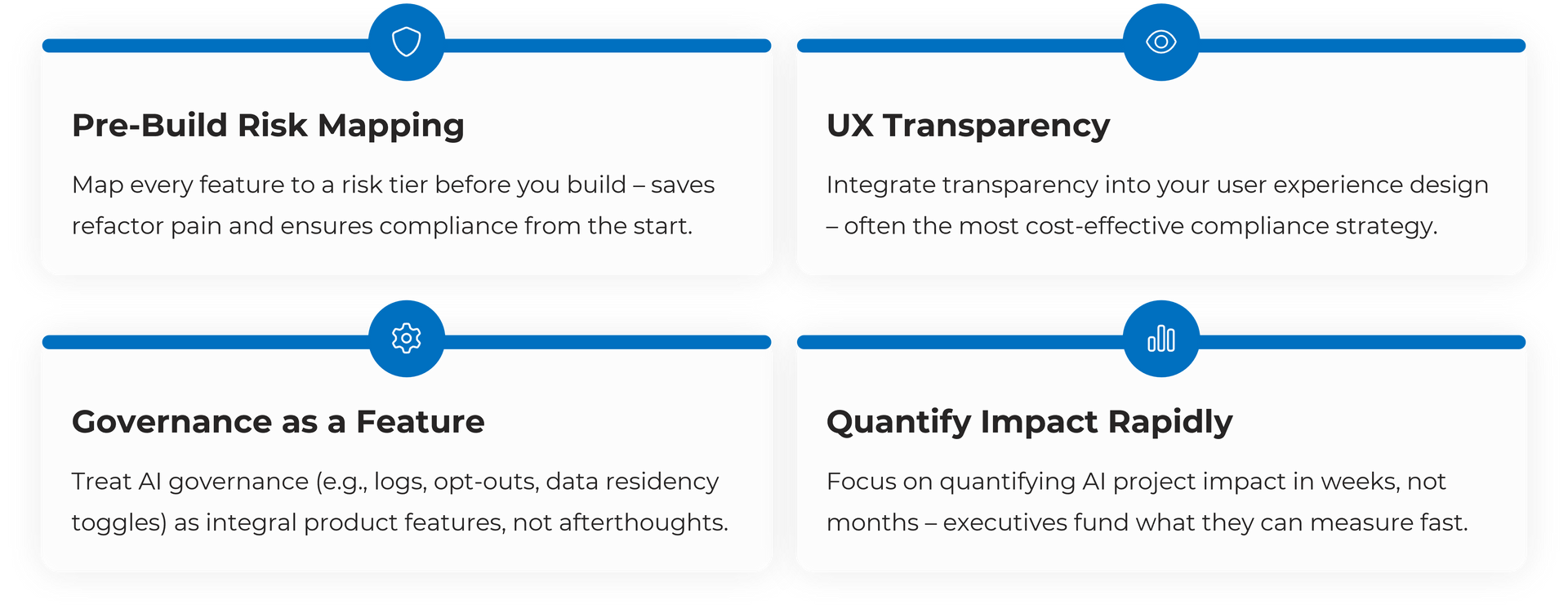

1) Mapping risks before you build avoids costly refactoring later.

2) UX transparency beats fines – a simple “AI Assistant” badge often fulfils Art. 50 for limited-risk bots.

3) Governance sells – audit logs, opt-outs and data-residency switches are not red tape; they win customer trust.
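Takeaway 2 can be implemented with very little effort. The Python sketch below is a hypothetical chat wrapper, not any specific product's API: it marks every bot message as AI-generated so the UI can show an "AI Assistant" badge, and prepends a plain-language disclosure to the first reply of each session. The message fields and disclosure wording are assumptions, and whether a given design satisfies Art. 50 still needs legal review.

```python
from dataclasses import dataclass, field

# Hypothetical disclosure wording; adapt it to your product and language.
DISCLOSURE = "You are chatting with an AI Assistant."


@dataclass
class ChatSession:
    """Tracks whether the current user has already seen the disclosure."""
    disclosed: bool = False
    transcript: list = field(default_factory=list)

    def bot_reply(self, text: str) -> dict:
        # Every bot message carries a machine-readable AI flag and a badge label.
        message = {"text": text, "ai_generated": True, "badge": "AI Assistant"}
        # The full disclosure sentence is shown once, on the first reply.
        if not self.disclosed:
            message["text"] = f"{DISCLOSURE}\n\n{text}"
            self.disclosed = True
        self.transcript.append(message)
        return message


session = ChatSession()
print(session.bot_reply("Hello! How can I help?")["text"])
print(session.bot_reply("Here are our opening hours.")["text"])
```

Keeping the `ai_generated` flag in the stored transcript also makes the disclosure auditable later, which ties back to takeaway 3.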

Vendor-Selection Checklist and How dreamleap Measures Up

Five Must-Ask Questions for Vendor Selection

- Is the supplier compliant with the regulations that govern building AI models?

- Does the supplier provide comprehensive documentation of its data and models?

- Does it offer built-in bias and robustness monitoring?

- Does it keep tamper-proof audit logs?

- Does it offer AI-literacy training for your teams?

Conclusion

The countdown is on. A clear eight-step programme, plus a partner who ticks the Model Card, CoP and AI-literacy boxes, turns 2 August 2025 from a compliance scare into a launchpad for trustworthy, scalable AI.

- ➜ Download the EU AI Act Compliance Guide

- ➜ Get in touch for the webinar – full Q&A and slides

- ➜ Book a Use-Case Ideation Workshop – map your processes to the AI Act

dreamleap guides you from first risk scan to audit-ready report, so you can innovate with confidence.

Why is dreamleap AI used by many SMBs?

dreamleap uses an intelligent retrieval mechanism built on LLMs, retrieval-augmented generation (RAG), agentic components and advanced AI engineering, and none of its models or mechanisms process data outside the EU.

dreamleap already provides many top AI agents out of the box, with a strong focus on business value, enablement, data compliance, and security, combined with flexible hosting options and affordable pricing for SMBs.

What about Microsoft Copilot vs. OpenAI ChatGPT?

Microsoft Copilot offers stronger safeguards for EU companies' data processing. However, Microsoft states that "Microsoft 365 Copilot calls to the LLM are routed to the closest data centers in the region, but also can call into other regions where capacity is available during high utilization periods." All the important details are here: https://learn.microsoft.com/en-us/copilot/microsoft-365/microsoft-365-copilot-privacy

For a broader overview, see: https://www.rosenthal.ch/downloads/VISCHER_ki-tools-03-25.pdf

What do I have to watch out for when evaluating AI providers?

First, always ask a provider whether they use the OpenAI GPT API directly. If they do, your data may be at risk because of OpenAI's recent data-retention changes.

Second, verify whether providers build their AI solutions on top of existing platforms (such as Crew AI, n8n, Make, or Lovable). If so, clarify whether these tools are approved for use within your organization. Many of them process your data externally or require broad access to it, which may not be appropriate for highly sensitive information.

Third, if your AI applications need internal data, make sure you work with a provider like dreamleap that offers dedicated models hosted in your own cloud or on-premises environment.

If you're an SMB or work only with non-sensitive data (i.e., no strategic information, personally identifiable information (PII) or confidential content), and you can accept that the data may be processed outside the EU or accessed by the tool itself, ChatGPT or similar services can be an acceptable choice.
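The rule of thumb in the last few paragraphs can be written down as a simple routing gate. The Python sketch below assumes each request is tagged with the three sensitivity flags named above, and each provider is described by two hypothetical attributes: EU-only processing and dedicated hosting in your own cloud or on premises. Sensitive data goes only to providers that meet both; everything else may use any configured provider. Provider names are placeholders, and reliably tagging the data, the hard part in practice, is left out.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Request:
    contains_pii: bool
    is_strategic: bool
    is_confidential: bool

    @property
    def is_sensitive(self) -> bool:
        return self.contains_pii or self.is_strategic or self.is_confidential


@dataclass(frozen=True)
class Provider:
    name: str
    processes_only_in_eu: bool
    dedicated_hosting: bool  # own cloud or on-premises deployment


def is_allowed(request: Request, provider: Provider) -> bool:
    """Non-sensitive data may go to any provider; sensitive data needs both guarantees."""
    if not request.is_sensitive:
        return True
    return provider.processes_only_in_eu and provider.dedicated_hosting


def route(request: Request, providers: Sequence[Provider]) -> Optional[Provider]:
    """Return the first allowed provider in order of preference, or None."""
    for provider in providers:
        if is_allowed(request, provider):
            return provider
    return None


public_api = Provider("public-chat-service", processes_only_in_eu=False, dedicated_hosting=False)
dedicated = Provider("dedicated-eu-model", processes_only_in_eu=True, dedicated_hosting=True)

print(route(Request(False, False, False), [public_api, dedicated]).name)  # public-chat-service
print(route(Request(True, False, False), [public_api, dedicated]).name)   # dedicated-eu-model
print(route(Request(True, False, False), [public_api]))                   # None
```

Returning `None` rather than falling back to the first provider means a sensitive request fails closed when no compliant option is configured.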

dreamleap is ideal if you want maximum security and flexibility to integrate with your own data, even alongside third-party tools, while maintaining full control.

Glossary & Further Reading

| Term | Short definition |
|---|---|
| GPAI (General-Purpose AI model) | A broadly trained model adaptable to many downstream tasks; subject to the provider obligations in Art. 53-55. |
| Risk-class matrix | Four legal tiers (Unacceptable, High, Limited, Minimal) determining which controls apply. |
| Model Card | Standard "passport" outlining a model's purpose, data provenance, metrics, limitations and ethical risks; GPAI providers must keep comparable technical documentation (Art. 53). |
| Code of Practice (CoP) | Voluntary but expected baseline controls that GPAI providers sign to demonstrate good practice (Art. 56). |
| Conformity assessment | CE-style procedure (testing, documentation, notified body where required) mandatory for High-Risk AI systems before market launch. |
| AI Literacy (Art. 4) | Proof that staff are trained in safe and ethical AI use; obligatory since 2 Feb 2025. |
| Bias & Fairness metrics | Quantitative tests (e.g., demographic parity, equalized odds) that reveal systematic performance gaps. |
| Tamper-proof audit logs | Immutable, time-stamped records of model calls, prompts and outputs; high-risk systems must support automatic event logging (Art. 12). |
| Incident reporting | Notification of serious incidents to the competent authorities, at the latest within 15 days of becoming aware and sooner for the most severe cases (Art. 73). |
| AI Officer | Designated person responsible for risk register, compliance evidence and regulator liaison. |
| Guardrails | Technical and policy controls (prompt filters, PII masking, rate limits) that prevent unsafe or non-compliant model outputs. |
| Extraterritoriality | Principle that non-EU entities are bound if their AI outputs are used inside the EU single market. |
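The "tamper-proof audit logs" entry can be approximated with a hash chain. In the minimal Python sketch below, each log entry stores the SHA-256 hash of the previous one, so editing or deleting an earlier entry breaks verification. This is an assumed design, not a format prescribed by the Act. A hash chain is tamper-evident rather than tamper-proof, so in practice the latest hash would also be stored in write-once storage or another system the log writer cannot alter. Class and field names are illustrative.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Optional

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(record: dict) -> str:
    # Canonical JSON (sorted keys, no extra whitespace) so the same record
    # always produces the same hash.
    payload = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class AuditLog:
    """Append-only log; each entry commits to the hash of its predecessor."""

    def __init__(self):
        self.entries = []

    def append(self, model: str, prompt: str, output: str, ts: Optional[str] = None) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        record = {
            "ts": ts or datetime.now(timezone.utc).isoformat(),
            "model": model,
            "prompt": prompt,
            "output": output,
            "prev_hash": prev_hash,
        }
        entry = {**record, "hash": _digest(record)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash and check each link back to the genesis value."""
        prev_hash = GENESIS
        for entry in self.entries:
            record = {k: v for k, v in entry.items() if k != "hash"}
            if record["prev_hash"] != prev_hash or _digest(record) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.append("faq-bot", "Opening hours?", "9-17 Mon-Fri", ts="2025-08-02T09:00:00+00:00")
log.append("faq-bot", "Holidays?", "Closed on public holidays", ts="2025-08-02T09:01:00+00:00")
print(log.verify())  # True
log.entries[0]["output"] = "edited after the fact"
print(log.verify())  # False
```

Because prompts and outputs may themselves contain personal data, such a log also needs access controls and a retention policy of its own.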